Ralph is Eating the World

If you're a skeptic who thinks autonomous AI coding sounds like a loony hallucination factory, you're not alone. The prevailing wisdom goes: hand the reins to an LLM, walk away, and return to find your codebase "improved" into an unmaintainable disaster.

I believed this too. Until I watched Ralph ship a production-ready web app overnight while I slept.

The Loop That Ate Everything

In 2011, Marc Andreessen declared that "Software is eating the world". Every industry would be disrupted by companies built on code. He was right. Netflix ate Blockbuster, Spotify ate Tower Records, Amazon ate everything.

Six years later, in 2017, Jensen Huang extended the thesis: "AI is going to eat software". The tools we use to build software would themselves be consumed by machine intelligence.

But something more recursive is happening now. A pattern is emerging that's eating the old paradigm of AI-assisted development itself. It's called the Ralph Wiggum technique, and it's changing how production software gets shipped.

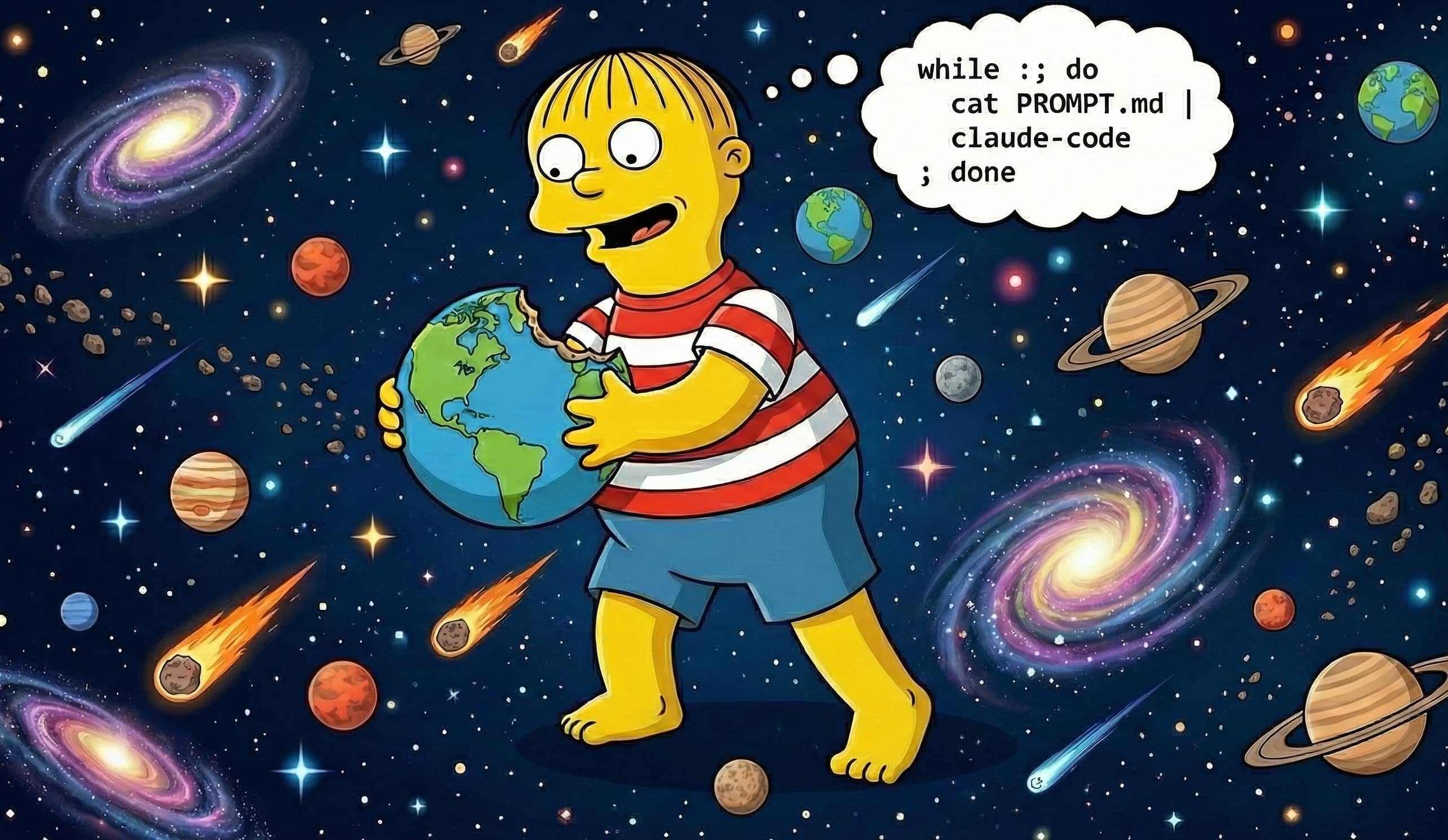

    while :; do cat PROMPT.md | claude-code ; done

That's it. One line of bash. The entire innovation is not stopping.

Why "Ralph Wiggum"?

The technique was born at a Y Combinator hackathon around May 2025, created by Australian developer Geoffrey Huntley. He named it after the Simpsons character who is "perpetually confused, always making mistakes, but never stopping."

The philosophy is counterintuitive: being deterministically bad in an undeterministic world is better than being unpredictably good.

Traditional AI-assisted development works like this: you give Claude a task, watch it work, intervene when it goes off track, course-correct, repeat. Human-in-the-loop. Careful. Controlled.

Ralph inverts this entirely. You define the end state with surgical precision, then let the agent iterate autonomously until it gets there. Human-on-the-loop. You're not directing traffic. You're defining the destination.

"Building software with Ralph requires faith and belief in eventual consistency. Ralph will test you." (Geoffrey Huntley)

The Evidence Parade

The results are hard to dismiss.

$50k in value, $297 in API costs. One developer reported delivering a contract worth fifty thousand dollars using Ralph loops, burning through less than three hundred dollars in Claude API calls.

Six repositories generated overnight. At the hackathon where Ralph was born, teams shipped entire product portfolios while sleeping. Not prototypes. Working software with tests, documentation, and deployment configurations.

React v16 to v19 migration: 14 hours, zero human input. A developer kicked off a major framework migration before bed and woke up to a passing test suite. The kind of tedious, error-prone work that typically consumes sprints was handled autonomously.

Integration tests to unit tests: 4 minutes to 2 seconds. While the developer slept, Ralph refactored an entire test suite from slow integration tests to fast unit tests. Runtime dropped by two orders of magnitude.

But the most striking example is CURSED, a programming language that Ralph built and then learned to program in.

The CURSED project ran autonomously for three months. Ralph created a functional compiler with Gen Z slang keywords (slay = function, sus = variable, based = true), LLVM compilation, and a standard library. Then Ralph wrote programs in its own language. A language that never appeared in any training data.

This isn't autocomplete. This is something else.

The Success Stories Come With a Caveat

These are the wins that get shared. Plenty of loops fail, burn API costs, and produce nothing usable. The difference isn't the technique. It's the operator.

LLMs are mirrors of operator skill. The quality of output directly reflects the quality of input. An experienced engineer can be surprisingly bad at AI, writing vague prompts that produce vague results. A junior developer with strong prompt engineering ability can outperform the senior engineer using the same model.

The hiring question shifts. You're not just looking for people who can code. You're looking for people who can write prompts that converge toward solutions. The ability to precisely specify an end state, to define "done" with enough clarity that an agent can find its way there, is becoming a core engineering competency.

Stop directing Claude step-by-step. Define what success looks like and let Ralph figure out how to get there.

The Implementation Pattern

The practitioners who've made Ralph work at scale share common patterns.

Define "Done" First. Matt Pocock, who popularized many of these techniques, uses a PRD-based approach: a JSON file of user stories, each with a passes: false flag. Ralph iterates until all flags flip to true. The shift is from planning "how" to specifying "what".

    src/
    ├── requirements/
    │   └── user-stories.json   # The contract
    ├── PROMPT.md               # Ralph's instructions
    └── progress.txt            # Append-only history
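
To make "iterate until all flags flip to true" concrete, here is a minimal sketch of a done-check for the user stories file. It is an illustration, not Matt Pocock's actual tooling: the function names are made up, and it assumes pretty-printed JSON with each passes flag on its own line, because grep counts lines rather than matches.

```bash
# Count user stories still marked "passes": false in a PRD file.
# Assumption: pretty-printed JSON, one "passes" flag per line.
remaining_stories() {
  local prd_file="$1"
  # grep -c prints the number of matching lines and exits 1 when that
  # number is 0, so "|| true" keeps this usable under `set -e`.
  grep -c -E '"passes"[[:space:]]*:[[:space:]]*false' "$prd_file" || true
}

# Succeeds only when no story is still failing.
all_stories_pass() {
  [ "$(remaining_stories "$1")" -eq 0 ]
}

# Example (path from the layout above):
#   all_stories_pass src/requirements/user-stories.json && echo "done"
```

A JSON-aware tool would be more robust than grep for anything beyond a simple flat file.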

Guide Step Size. Small, atomic tasks. Each PRD item must fit within a single context window, or you get context rot (degraded performance as the model loses track of earlier instructions). Good scope: "Add database column and migration." Bad scope: "Build entire dashboard."

Commit Each Iteration. Non-negotiable. If Ralph goes off the rails at iteration 47, you need to be able to git checkout back to iteration 46. Each commit is a checkpoint.
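
One way to get a checkpoint per iteration is to have the wrapper script commit after every pass. The sketch below is an assumption about how you might wire this up, not part of the original technique: the function names and the "ralph: iteration N" message format are hypothetical.

```bash
# Commit the whole working tree as a numbered checkpoint.
# Skips the commit when the iteration changed nothing.
checkpoint() {
  local iteration="$1"
  git add -A
  if git diff --cached --quiet; then
    echo "iteration $iteration: nothing to commit"
    return 0
  fi
  git commit -q -m "ralph: iteration $iteration"
}

# Print the commit hash for a given iteration (empty if there is none).
# The anchored pattern keeps "iteration 4" from matching "iteration 46".
checkpoint_hash() {
  git log --format=%H --grep="^ralph: iteration $1\$" -n 1 2>/dev/null
}

# Example: inspect the tree as it was after iteration 46.
#   git checkout "$(checkpoint_hash 46)"
```

Checking out a hash this way leaves you on a detached HEAD, which is fine for inspection. Create a branch from it if you want to resume the loop from that point.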

Keep CI Green. Every commit must pass tests and type checks. Broken code compounds across iterations. If you let entropy accumulate, Ralph will build on broken foundations.
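
A simple way to enforce this locally is a gate function that runs your checks in order and refuses to continue on the first failure. This is a sketch under assumptions: run_gates is a hypothetical helper, and the commands in the example comment are placeholders for whatever test and type-check commands your project actually uses.

```bash
# Run each gate command in order; stop at the first one that fails.
# Each argument is one shell command line.
run_gates() {
  local gate
  for gate in "$@"; do
    if ! bash -c "$gate"; then
      echo "gate failed: $gate" >&2
      return 1
    fi
  done
}

# Example (placeholder commands, substitute your own):
#   run_gates "npm test" "npx tsc --noEmit" && git commit -am "iteration passed"
```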

Append to Progress. Use the verb "append" explicitly, or Ralph will overwrite history. progress.txt becomes your audit trail. What was attempted, what worked, what failed.
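
The same rule applies to any wrapper script you write around the loop: in shell, >> appends and > truncates. A minimal sketch, assuming a hypothetical log_progress helper and a PROGRESS_FILE variable that defaults to progress.txt:

```bash
# Append one timestamped line to the progress log; never truncate it.
#   $1 iteration number, $2 free-form note
log_progress() {
  local file="${PROGRESS_FILE:-progress.txt}"
  printf '%s iteration=%s %s\n' \
    "$(date -u +%Y-%m-%dT%H:%M:%SZ)" "$1" "$2" >> "$file"
}

# Example:
#   log_progress 12 "added migration, tests green"
```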

Build End-to-End. Complete features vertically, not layers horizontally. A working feature is feedback. A half-built layer is debt.

Use Human-in-the-Loop for Architecture. Early decisions cascade. When you're defining the shape of the system, stay engaged. Once the architecture is set, let Ralph handle the implementation.

Choose Your Loop Mode. Practitioners distinguish between HITL Ralph (pair programming with persistence, single iterations, review and adjust) and AFK Ralph (overnight runs, capped iterations, notifications when finished). HITL is for exploration. AFK is where the leverage lives.
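
For AFK runs, the bare while loop needs a cap and a stop condition. Here is a minimal sketch of what that might look like. The ralph_loop function and its arguments are hypothetical, the done-check is whatever command you trust to define "done", and notifications are left out.

```bash
# Run an agent command until a done-check passes or the cap is reached.
#   $1 max iterations, $2 agent command line, $3 done-check command line
# Returns 0 when the done-check passes, 1 when the cap is hit first.
ralph_loop() {
  local max_iterations="$1" agent_cmd="$2" done_cmd="$3"
  local i=0
  until bash -c "$done_cmd"; do
    if [ "$i" -ge "$max_iterations" ]; then
      echo "stopped at cap of $max_iterations iteration(s)" >&2
      return 1
    fi
    i=$((i + 1))
    echo "iteration $i of $max_iterations"
    # A failing agent run is logged, not fatal: the next pass may recover.
    bash -c "$agent_cmd" || echo "agent exited non-zero on iteration $i" >&2
  done
  echo "done after $i iteration(s)"
}

# Example, reusing the agent command from the one-liner earlier
# (the DONE marker file is a made-up convention):
#   ralph_loop 20 'cat PROMPT.md | claude-code' 'test -f DONE'
```

The done-check runs before each iteration, so a task that is already finished costs nothing, and the agent never runs more than the capped number of times.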

When Ralph Fails

Ralph isn't magic. The technique fails predictably in specific situations.

Ambiguous requirements. If you can't define "done" precisely, Ralph will iterate forever toward nothing. The mirror reflects your clarity.

Architectural decisions. Ralph optimizes locally. It can't see the system-level implications of early choices. Keep humans in the loop for structure.

Security-sensitive code. Authentication, authorization, cryptography. These require human review. Ralph can help, but the responsibility stays with you.

Novel problem domains. If the solution doesn't exist in training data, Ralph will hallucinate confidently. Know when you're at the frontier.

The pattern that works: PRD Definition (Human) → Ralph Loop Execution (Sandboxed) → Quality Gates (Automated) → Human Review → Merge Decision (Human).

Never auto-merge to production. Ralph ships to staging. Humans ship to prod.

When Ralph Isn't the Answer

Sometimes Ralph fails not because the loop breaks, but because the setup cost exceeds the benefit.

Small scripts where writing the prompt takes longer than writing the code. Regulated codebases requiring audit trails for every change. Teams without API budget headroom. Projects where requirements shift faster than loops complete. Contexts where you need to understand the code, not just ship it.

Know when you're holding a hammer, and remember that not everything is a nail.

The Meta-Recursion

Software ate the world. AI is eating software. And now Ralph is eating the old paradigm of AI-assisted development.

The victims are careful, step-by-step workflows. The elaborate multi-agent orchestrators. The complex frameworks for managing AI assistants. All of it consumed by one line of bash and the insight that not stopping is the innovation.

"How it started: Swarms, multi-agent orchestrators, complex frameworks. How it's going: Ralph Wiggum." (Matt Pocock)

What Andreessen observed about software disruption applies here. The incumbents (traditional development workflows) look strong precisely because they've been refined over decades. But that refinement is a local maximum. The slope that got you here won't get you there.

Getting Started

You need three things to run your first loop:

- Claude Code CLI with a Pro or Team subscription ($20/month gets you started)

- A boring task that would take you 2-3 hours manually

- A clear definition of done in three sentences or less

Start with $50-100 in API budget for your first real loop. Pick something you've done before. Write the prompt like you're explaining it to a competent junior developer who has never seen your codebase.

A healthy loop shows diminishing errors per iteration and converges within 10-20 cycles for small tasks. If errors plateau or increase, your prompt needs work.
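
If you record an error count per iteration (failing tests, type errors, whatever your gates report), the health check can be automated. The sketch below is a rough heuristic with a hypothetical error_trend helper: it only compares the first and last counts of the window you pass in.

```bash
# Classify the trend of per-iteration error counts, oldest first.
# Prints "converging", "plateau", "diverging", or "unknown" when
# fewer than two counts are given.
error_trend() {
  local first="$1" last
  if [ "$#" -lt 2 ]; then
    echo "unknown"
    return 0
  fi
  for last in "$@"; do :; done   # leaves the final argument in $last
  if [ "$last" -lt "$first" ]; then
    echo "converging"
  elif [ "$last" -eq "$first" ]; then
    echo "plateau"
  else
    echo "diverging"
  fi
}

# Example: pass the counts from the most recent iterations.
#   error_trend 12 9 9 4
```

A "plateau" or "diverging" result over several iterations is the signal to stop the loop and rework the prompt.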

My claude_superpowers repo has the implementation: ralph-loop.sh for the iteration script, prompt templates to get started, and production-readiness skills for structured audits. Clone it, copy the workflow folder to your project, and run your first loop tonight.

Resources Worth Reading

From the source:

- Geoffrey Huntley's original Ralph post. The philosophy behind the technique.

- The CURSED story. Three months of autonomous loop, one programming language.

Community deep dives:

- A Brief History of Ralph. HumanLayer's timeline from June 2025 to present.

- Ralph Wiggum: Autonomous Loops for Claude Code. Technical breakdown of the Stop hook mechanism.

Follow the practitioners:

- @GeoffreyHuntley. The creator, posting updates and experiments.

- @mattpocockuk. TypeScript educator pushing Ralph + Opus 4.5.

The question isn't whether to adopt autonomous coding loops. The question is how quickly you can learn to define "done" with enough precision that a tireless agent can find its way there.

Ralph will test you. Ralph will fail. And then Ralph will ship.
